How to Do an SEO Site Audit Without Google Webmaster Tools and Analytics

Here at Zion & Zion, I perform site audits for all of our prospective interactive clients. In doing so, I have refined my search engine optimization (SEO) audit process to quickly assess issues with a site without access to Google Webmaster Tools or Google Analytics. I do this by using a variety of other tools to evaluate the different ranking factors.

Tool Box

Though I have a large variety of tools at my disposal, I have refined this process to use 10 tools. Here is a list of tools and reports I use:

- Screaming Frog

  - All Data Export Report

  - All In Links Report

  - All Out Links Report

  - Images Missing Alt Text Report

  - Redirect Chains Report

  - Canonical Errors Report

- SEM Rush

  - Organic Positions Report

  - Site Audit Issues Report

- Ahrefs

  - Links Report

- Google Scraper

- Google Mobile Friendly test

- WebPageTest.org

- Excel SEOTools Add-in

- Wappalyzer

- GoDaddy WhoIs Tool

Site Background

First, I like to find out as much as I can about the site, so I use the Wappalyzer browser plugin to see what platform the site is built on. Then I look up the WHOIS information using GoDaddy’s tool. This way I can find out who owns the site and where they are located, and check whether the registrant is the business owner or some other company that runs the site. I also like to find out the age of the site, as this can be important information if the site is not ranking well.

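From there I like to see if they are using Google Analytics or Google Tag Manager. If they are using Google Analytics, I look at the number after the last dash in the tracking ID (for example, the 12 in UA-1234567-12). That number is the property's index within the account, so if it is high, the account holds many properties and I can assume an agency is probably running the account for them.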
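As a rough illustration of this check, here is a minimal Python sketch that pulls `UA-` tracking IDs out of a page's source and reads the property index. The function names and the threshold of 10 are my own assumptions for the example, not a rule from any tool.

```python
import re

# Classic Google Analytics IDs look like UA-<account>-<property index>.
UA_PATTERN = re.compile(r"\bUA-(\d{4,10})-(\d{1,4})\b")


def find_ga_properties(html):
    """Return unique (tracking_id, account_id, property_index) tuples found in the source."""
    found = []
    for match in UA_PATTERN.finditer(html):
        entry = (match.group(0), match.group(1), int(match.group(2)))
        if entry not in found:
            found.append(entry)
    return found


def looks_agency_managed(property_index, threshold=10):
    """Heuristic only: the threshold is an assumed cut-off, tune it to taste."""
    return property_index >= threshold
```

For example, `find_ga_properties(page_source)` on a page containing `UA-1234567-12` yields a property index of 12, which `looks_agency_managed` would flag under the assumed threshold.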

Site Health

The most important thing to find out is if the site is indexed properly. This way I can determine if something has to be fixed before the rest of the site can be optimized. With all the different ranking factors and Google penalties, it can be hard to determine what is wrong with a website. I check to see if the site is healthy by looking for:

- Proper indexation

- Rankings

- Drops in traffic

- Backlinks

- Compatibility with mobile devices

Indexation

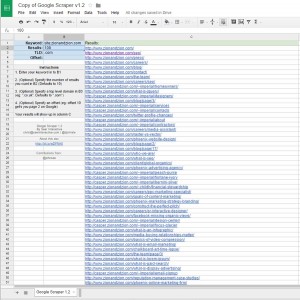

To check the indexation of a website, I use two tools from my toolbox: the Screaming Frog All Data Export Report and Google Scraper.

First, I use the scraper tool to pull all the indexed pages of the website using the site: advanced operator. Then I compare the pages it finds with all the HTML documents that return a 200 status code in the Screaming Frog All Data Export Report. If the numbers are close, then the site appears to be indexed properly. If they differ widely, then something is likely wrong.
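The comparison can be sketched in a few lines of Python. This is only an illustration: the row keys (`url`, `status`, `content_type`) and the 10% tolerance are assumptions made for the example and would need to be mapped to the actual columns of your exports.

```python
def compare_index(indexed_urls, crawled_rows, tolerance=0.1):
    """Compare scraped index URLs against crawled HTML pages that returned 200.

    crawled_rows: dicts with hypothetical keys 'url', 'status', 'content_type'.
    tolerance: assumed fraction by which the two counts may differ and still be "close".
    """
    crawlable = {
        row["url"]
        for row in crawled_rows
        if row["status"] == 200 and row["content_type"].startswith("text/html")
    }
    indexed = set(indexed_urls)
    difference = abs(len(indexed) - len(crawlable))
    return {
        "indexed_not_crawled": sorted(indexed - crawlable),
        "crawled_not_indexed": sorted(crawlable - indexed),
        "counts_close": difference <= tolerance * max(len(crawlable), 1),
    }
```

The two lists are usually more useful than the totals, since they show exactly which URLs explain a gap.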

When the totals are different, I search through the Google Scraper report to find what is or isn’t being indexed and why. The first thing I do is place the list of indexed URLs into an Excel table, then use the SEOTools add-in to find the response code for every indexed page. In some cases you will find that a lot of 404 pages are indexed, causing the discrepancy between the two files. This can be fixed with the Remove URLs tool in Google Webmaster Tools.

Another issue may be duplicate indexation due to both HTTP and HTTPS rendering the same content. The same issue can occur when multiple subdomains have the same content. The most common case is the www subdomain and the bare domain serving the same pages. These issues can be resolved with URL rewrite rules in the .htaccess file.
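To spot these duplicates in a list of indexed URLs, one option is to group URLs that differ only by protocol or by a leading `www.`. The sketch below uses only the Python standard library; the function name is hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def find_host_duplicates(urls):
    """Group URLs that differ only by scheme (http/https) or a leading 'www.'."""
    groups = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        host = (parts.hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        # Treat an empty path as the root so 'example.com' and 'example.com/' match.
        key = (host, parts.path or "/", parts.query)
        groups[key].add(url)
    return {key: sorted(variants) for key, variants in groups.items() if len(variants) > 1}
```

Any key that comes back with more than one variant is a page indexed under several addresses and a candidate for a redirect.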

Rankings

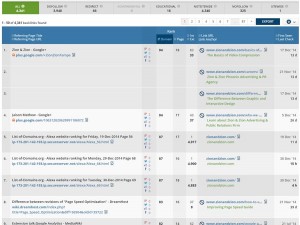

After I check the indexation of a site, I like to see where the site ranks. I use the SEM Rush Organic Positions Report to find what terms the site ranks for. A site should rank in the top three for its URL and brand terms. If it does not, then something may be wrong.

After looking at how the brand ranks, it is important to see how the site ranks for other terms. This can give you a good indication of how healthy Google considers the site. If a site ranks well for a lot of terms, then you can be pretty sure nothing is wrong with the site. If the site only ranks for brand terms, I then dig deeper to see why the site ranks so poorly. Ranking for brand terms is important, but ranking for other relevant terms is just as important, as it allows more people to find your site organically.

Traffic

I use the SEM Rush Organic Positions Report to display a graph of estimated traffic to the site. In this graph I look for drops in traffic and check to see if they coincide with a Google update. If the site has a drop, or multiple drops, in traffic at the same time as a Google update, I look on the site to find the specific issue the update targeted. If the site does not appear to have been affected by a Google update, I dig a little deeper.
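If you export the traffic estimates, the same check can be approximated in code. The sketch below is illustrative only: the 20% drop threshold and 31-day window are assumptions, and you would supply the update dates yourself from a source you trust.

```python
from datetime import timedelta


def find_drops(traffic, min_drop=0.2):
    """traffic: list of (date, visits) sorted by date. Returns [(date, fractional_drop)]."""
    drops = []
    for (_, previous), (day, current) in zip(traffic, traffic[1:]):
        if previous > 0 and (previous - current) / previous >= min_drop:
            drops.append((day, (previous - current) / previous))
    return drops


def match_updates(drops, update_dates, window_days=31):
    """Map each drop date to the update dates that fall within the window before it."""
    window = timedelta(days=window_days)
    return {
        day: [update for update in update_dates if timedelta(0) <= day - update <= window]
        for day, _ in drops
    }
```

A drop with an empty list next to it is one that no known update explains, which is the case where I dig deeper.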

Backlinks

Backlinks are a very important factor in how a website ranks. Both the quality and quantity of these links are important. Links can have a negative effect on your site’s rankings if they do not fall within the Google Quality Guidelines.

I use the Ahrefs Links Report to find all of the links to a site. When a site has few or no links, it will have a very difficult time ranking. If a site has lots of links but is still ranking poorly, I check to see if the links violate the guidelines. I do this by looking for suspicious TLDs, low domain rank, a high link-to-domain ratio, and skewed anchor text percentages.

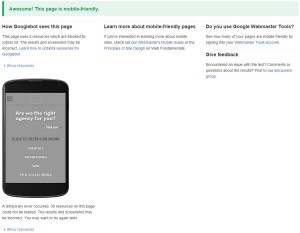
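Some of these backlink signals are simple arithmetic on an exported link list. Here is a minimal sketch, assuming each link is a `(source_url, anchor_text)` pair; the function name and output keys are hypothetical, and what counts as "too high" is left to your judgment.

```python
from collections import Counter
from urllib.parse import urlsplit


def backlink_profile(links):
    """Summarize links given as (source_url, anchor_text) pairs."""
    links = list(links)
    if not links:
        return {"links_per_domain": 0.0, "anchor_share": {}, "tlds": {}}
    domains = {(urlsplit(source).hostname or "").lower() for source, _ in links}
    anchors = Counter(anchor.strip().lower() for _, anchor in links)
    # Count each referring domain once per TLD (last label of the hostname).
    tlds = Counter(domain.rsplit(".", 1)[-1] for domain in domains)
    return {
        "links_per_domain": len(links) / len(domains),
        "anchor_share": {text: count / len(links) for text, count in anchors.items()},
        "tlds": dict(tlds),
    }
```

A single keyword-rich anchor taking up most of `anchor_share`, or many links coming from very few domains, are the patterns I would look at more closely.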

Mobile Friendly

Having a mobile-friendly site is becoming more and more important, with at least 31% of searches being done on mobile devices. Using the Google Mobile Friendly test, I can tell if search engines find any issues with the website on mobile devices. If there are any, they should be fixed so that they do not hinder mobile rankings.

Technical Issues

After assessing the health of a site, I look for technical issues that may be hindering rankings. I look for issues with the HTML, robots file, sitemaps, and assets.

HTML Issues

The first thing I do to check for on-site issues is run a few reports in Screaming Frog: the All Data Export Report, All In Links Report, All Out Links Report, Images Missing Alt Text Report, Redirect Chains Report, and Canonical Errors Report.

URLs

Having a good URL structure is important for any site. I like to look for things that may hurt rankings, such as multiple subdomains, URLs that are too long, nonsensical directories or file names, and use of parameters. I also note how keywords are used in the URLs.

Broken Links

I use the All In Links Report and All Out Links Report to find broken internal or external links. These reports list each link with its source page, its destination page, and the status code of the link. I sort this list by status code to look for 3xx (redirection) and 4xx (client error) responses. While internal 3xx redirects do get the user to the correct page, it is not best practice to send users through unnecessary redirects. I use the Screaming Frog Redirect Chains Report to make sure I did not miss anything with the other reports.
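The sorting step is easy to reproduce outside a spreadsheet. The sketch below assumes each exported row has been loaded as a dict with hypothetical keys `source`, `destination`, and `status`; rename them to match your export.

```python
def classify_links(rows):
    """Split link rows into redirects (3xx) and client errors (4xx), sorted by status."""
    report = {"redirects": [], "client_errors": []}
    for row in sorted(rows, key=lambda r: r["status"]):
        entry = (row["source"], row["destination"], row["status"])
        if 300 <= row["status"] <= 399:
            report["redirects"].append(entry)
        elif 400 <= row["status"] <= 499:
            report["client_errors"].append(entry)
    return report
```

Each entry keeps the source page, so the list doubles as a to-do list of pages whose links need updating.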

Images

Images can be optimized in many different ways. I use WebPageTest.org to see if images are compressed and progressive. Then I use the Screaming Frog Images Missing Alt Text Report to find all the images on the site that are missing the alt attribute. Finally, I use the Screaming Frog All Data Export Report to see if any of the images have poor file names or if they are too large (anything over 100 KB).
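For a single page, the alt attribute and file size checks can be sketched with the Python standard library. This is an illustration rather than a replacement for the reports: the names are hypothetical, an empty `alt=""` is treated as present, and the size map is assumed to come from your own crawl data.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> tag that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src", ""))


def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


def oversized_images(sizes_by_url, limit_bytes=100 * 1024):
    """Return image URLs larger than the limit (100 KB, the cut-off used in this audit)."""
    return sorted(url for url, size in sizes_by_url.items() if size > limit_bytes)
```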

Canonicals

Setting up proper canonicals on every page is an SEO best practice. I use the Screaming Frog All Data Export Report to check if all pages are using them. Then I use the Screaming Frog Canonical Errors Report to find any issues with the canonicals.

Meta Elements

I use the Screaming Frog All Data Export Report to list all the meta titles and descriptions. I make sure that there are no duplicates and that they are the correct length. If meta keywords are present, I note how many there are, whether they are the same on every page, and whether they are spammy. These are all issues that could lead to poor rankings.
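The duplicate and length checks on titles can be sketched as follows. The input is assumed to be `(url, title)` pairs, and the 60-character limit is an assumed rule of thumb for the example, not a figure from the report or from Google. The same pattern would work for descriptions with a different limit.

```python
from collections import defaultdict


def audit_titles(pages, max_length=60):
    """pages: iterable of (url, title). Flags duplicate, over-long, and missing titles."""
    by_title = defaultdict(list)
    too_long = []
    missing = []
    for url, title in pages:
        cleaned = (title or "").strip()
        if not cleaned:
            missing.append(url)
            continue
        # Compare case-insensitively so "Home" and "home" count as duplicates.
        by_title[cleaned.lower()].append(url)
        if len(cleaned) > max_length:
            too_long.append(url)
    duplicates = {title: urls for title, urls in by_title.items() if len(urls) > 1}
    return {"duplicates": duplicates, "too_long": too_long, "missing": missing}
```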

HTML Miscellaneous

I use the Screaming Frog All Data Export Report to see if there are multiple H1s on any page and to see if they are unique to each page. A lot of times the name of the site will be the H1 for each page. I also check to see if the H2s are different on every page.

I use the Screaming Frog All Data Export Report to check if pages are set to noindex. Some pages, such as archived pages, should be noindex. I check to see which pages are noindexed and whether that tag is keeping pages out of the index that should be in it. Then I check the Hash column to see if there are any duplicates; if so, there are duplicated pages on the site. Finally, I see how many pages have word counts under 500. If it is a large percentage, that could be what is hindering rankings.
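The hash and word count checks reduce to a small amount of grouping and counting. A minimal sketch, assuming the export rows are loaded as dicts with hypothetical keys `url`, `hash`, and `word_count`:

```python
from collections import defaultdict


def content_checks(rows, min_words=500):
    """Return (groups of URLs sharing a hash, share of pages under min_words)."""
    by_hash = defaultdict(list)
    thin = []
    for row in rows:
        by_hash[row["hash"]].append(row["url"])
        if row["word_count"] < min_words:
            thin.append(row["url"])
    duplicates = [urls for urls in by_hash.values() if len(urls) > 1]
    thin_share = len(thin) / len(rows) if rows else 0.0
    return duplicates, thin_share
```

What counts as a "large percentage" of thin pages is a judgment call, so the function only reports the share and leaves the decision to you.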

I use the SEM Rush Site Audit Issues Report to find anything I may have missed. This tool is really good at finding little issues that are easily overlooked, such as hreflang errors.

Controlling the Bots

Telling search engines where to go and not to go is vital in getting the content you want indexed and served in results. You can use two files to do this: the sitemap.xml and robots.txt files.

Sitemap

The sitemap tells search engines what pages to go to. It is just a list of pages on your site that tells the bots when each page was last updated and how often to check on it. I not only check to see if a sitemap is present, I also make sure the URLs match the URLs the site actually serves and that the syntax is correct.
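Both sitemap checks can be sketched with the standard library XML parser. The example assumes a plain URL sitemap (not a sitemap index) that uses the standard sitemaps.org namespace; the function names are hypothetical. A syntax problem surfaces as an `xml.etree.ElementTree.ParseError` when the file is parsed.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> value from a URL sitemap; raises ET.ParseError on bad XML."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def sitemap_mismatches(xml_text, crawled_urls):
    """Compare sitemap entries with the URLs found by crawling the site."""
    listed = set(sitemap_urls(xml_text))
    crawled = set(crawled_urls)
    return {
        "missing_from_sitemap": sorted(crawled - listed),
        "listed_but_not_crawled": sorted(listed - crawled),
    }
```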

Robots.txt

The robots file is very powerful and can deindex a site completely if used incorrectly. Since the robots file can differ from site to site, I use the Robots.txt Checker to check the syntax. I also look to see if it is blocking CSS or JavaScript files, if directories are blocked that should not be, and if the location of the sitemap is listed.
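Python's standard library includes a robots.txt parser that can approximate the blocking and sitemap checks. Treat this as a sketch under a few assumptions: `urllib.robotparser` follows the original robots.txt conventions and does not understand wildcard patterns, so its answers may differ from how Googlebot reads the same file, and `site_maps()` requires Python 3.8 or later. The function name and the default user agent are my own choices.

```python
from urllib.robotparser import RobotFileParser


def audit_robots(robots_txt, asset_urls, user_agent="Googlebot"):
    """Report which of the given URLs are blocked and which sitemaps are declared."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [url for url in asset_urls if not parser.can_fetch(user_agent, url)]
    return {"blocked_assets": blocked, "sitemaps": parser.site_maps() or []}
```

Passing in the CSS and JavaScript URLs from the crawl shows at a glance whether any rendering assets are disallowed, and an empty `sitemaps` list means the sitemap location is not declared.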

Page Speed

Site speed is becoming more and more important, especially with the increase in mobile users. There are many different things that can contribute to a slow site. To get a quick overview, I use WebPageTest.org to find out how long each asset takes to load. This tool also provides a list of improvements. You can also use Google PageSpeed Insights, but I find WebPageTest.org to be more comprehensive.

Eye Test

Finally, after I have used all of my tools, I look at the source code of a few pages and look for problems. I check for things like excessive JavaScript or CSS, unnecessary tables, whether microdata is being used, and anything else out of the ordinary.

Conclusion

As I go through the audit process, I note things that could be affecting rankings. Then I create a list of these items, prioritizing them based on what I feel is hindering the site from ranking well. Finding the major issues is really important. Every site has something wrong with it, so it is important to find what is really affecting the rankings and organic traffic. This is different from compiling a list of little things that do not follow best practices. Lastly, I present my list of items to the potential or current client in our initial meeting, explaining how each issue is affecting their rankings.

If you have any improvements or questions about my process, please feel free to contact me on Twitter.